Launching Onoma alpha: building AI memory that actually works

We're launching Onoma, an AI memory layer that works across every model. Why we built it, why it's European-only, and what's next for Ryde's first venture.

Some products solve problems you didn't know you had. Others solve problems you've been silently frustrated by for months.

Onoma is the second kind.

Today, I'm excited to share that we're launching Onoma into alpha. It's the first product to come out of Ryde Ventures, and it represents everything we believe about building AI products: solve real problems, ship fast, and listen to users.

The problem nobody talks about

Let's be honest about how we use AI.

We dump everything into it. Contracts. Company strategies. Investor updates. Relationship frustrations. Health concerns. The stuff we'd never put in a Google search.

Convenience wins over privacy. Every time. We know this, and we do it anyway.

And AI rewards us for it. The more context it has, the better it understands us. The better it helps. So we keep feeding it more.

But here's what's uncomfortable: even when you're paying for the service, these companies can still train on your data. And what do they actually know about you? Try finding out. ChatGPT and Claude let you export your conversations and maybe see a brief summary of what they've learned. But the actual stored context they've built about you? You can't access it. You can't control it. You can't delete specific parts of it.

Your context is trapped inside each provider. And you have no idea what they're doing with it.

Then there's the switching problem. ChatGPT knows your projects. Claude knows your preferences. Gemini knows your work style. But none of them talk to each other. Switch providers? Start from zero.

Platform lock-in meets privacy black hole. And once you notice it, you can't unsee it.

What Onoma actually does

Onoma is an AI memory layer. One place where your context lives, shared across every model you use.

Think of it like this: instead of having separate conversations with Claude, GPT, Gemini, and Grok, you have one ongoing relationship with AI. Onoma remembers your projects, your preferences, your decisions. When you switch models, your context follows.

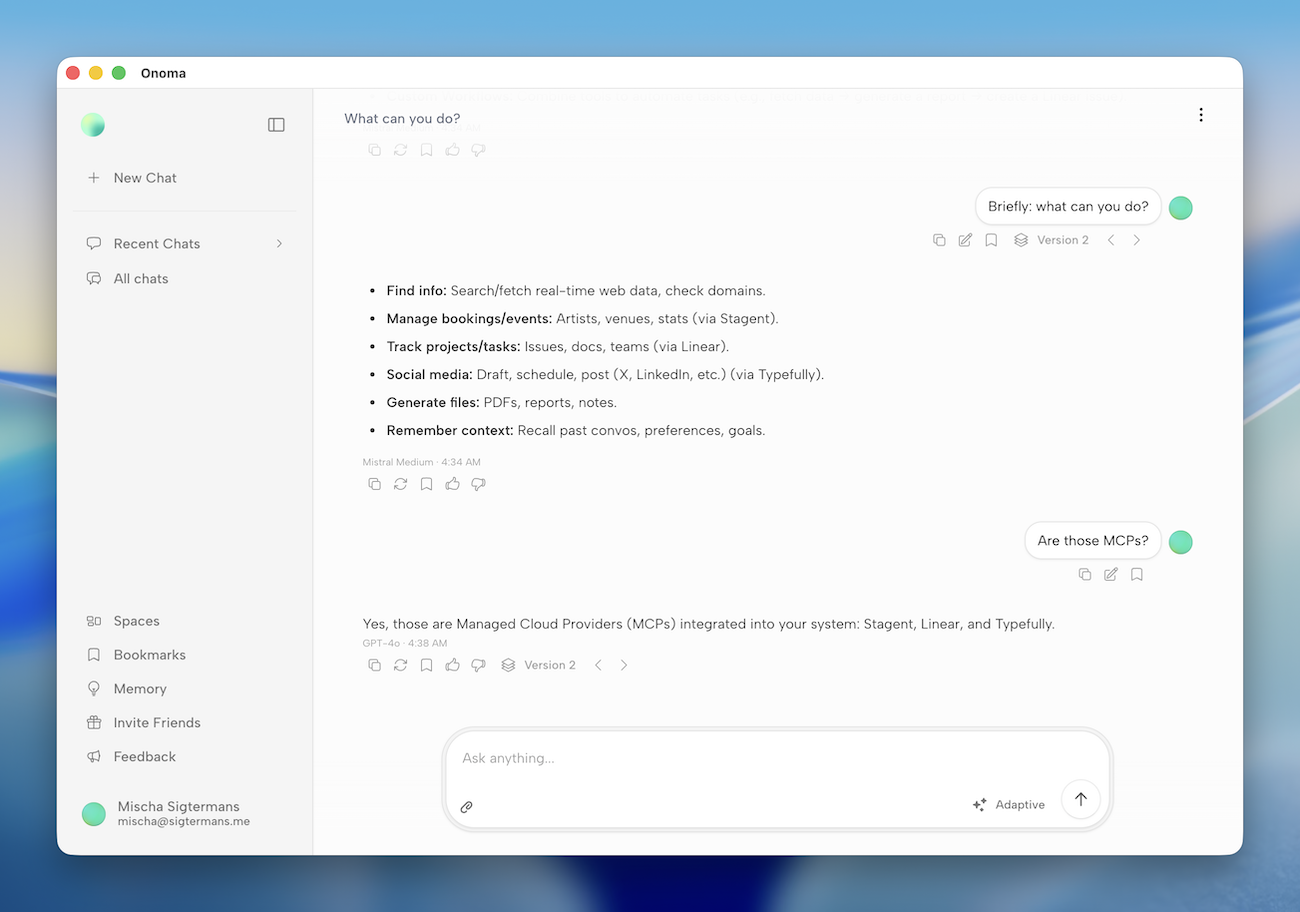

Right now in alpha, Onoma offers:

- Shared memory across 14 models from 7 providers (OpenAI, Anthropic, Google, xAI, Groq, Mistral, and more)

- Intelligent Spaces that automatically organize your conversations by context, not by arbitrary folders

- Adaptive routing that picks the right model for your question

- Instant model comparison so you can ask different models the same question and see which answer works best

- Document integration for uploading and generating files that become part of your searchable context

- MCP connections to your favourite external tools and data sources

The core insight is simple: your AI memory shouldn't be locked inside one platform. It should be yours. Visible. Controllable. Portable. And protected.

Why European-only matters

We made a deliberate choice with Onoma: European data residency, GDPR compliance from day one, and a focus on privacy that goes beyond checkbox compliance.

This isn't marketing. It's a product decision.

When we designed Onoma's architecture, we built a middleware called Cortex. It processes your messages locally before they reach external AI models. It anonymizes personally identifiable information. It encrypts your data. Your memories never leave the EU.
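Cortex's internals aren't described here, so the following is only a minimal sketch of one common way an "anonymize before the model sees it" step can work, assuming simple regex-based pseudonymization. PII is swapped for placeholder tokens, only the masked text would go to the external model, and the token-to-value map stays behind so the reply can be restored. The patterns, the `<EMAIL_1>` token format, and the `pseudonymize`/`restore` names are hypothetical and not Onoma's actual code.

```python
import re

# Hypothetical patterns for illustration only. Real PII detection needs far
# more than two regexes (names, addresses, IBANs, often an NER model).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d ()-]{7,}\d"),
}


def pseudonymize(text: str) -> tuple[str, dict[str, str]]:
    """Replace PII with placeholder tokens.

    Returns the masked text (what would be sent to an external model) and a
    token -> original map (what would stay inside the middleware).
    """
    mapping: dict[str, str] = {}  # token -> original value
    seen: dict[str, str] = {}     # original value -> token, so repeats reuse one token
    for label, pattern in PII_PATTERNS.items():
        def substitute(match: re.Match, label: str = label) -> str:
            value = match.group(0)
            if value not in seen:
                # Number tokens per label: <EMAIL_1>, <EMAIL_2>, ...
                count = sum(1 for t in mapping if t.startswith(f"<{label}_"))
                token = f"<{label}_{count + 1}>"
                seen[value] = token
                mapping[token] = value
            return seen[value]

        text = pattern.sub(substitute, text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into a model reply that uses the tokens."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text
```

For example, `pseudonymize("Email jan@example.nl or call +31 20 123 4567 about the contract.")` yields `"Email <EMAIL_1> or call <PHONE_1> about the contract."` plus a two-entry map. The model only ever sees the tokens, and `restore` maps them back afterwards. Regexes keep the sketch short; a production system would likely combine them with a trained entity recognizer.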

I've seen too many products treat privacy as an afterthought, something to patch in later when regulations catch up. We didn't want to build that way. Onoma handles sensitive context: your work, your projects, your preferences. That data deserves real protection.

For now, we're focused on European users who share these values. The product works better when we can be opinionated about how we handle data.

Ryde's first venture

Onoma is the first product to come out of Ryde Ventures. That matters for a few reasons.

When I joined Ryde as Partner and CPO, we talked about what AI-first product building actually looks like. Not just adding AI features to existing products, but building from the ground up with AI as the foundation.

Onoma is exactly that. We didn't start with a traditional product and ask, 'How do we add AI?' We started with a real problem, AI context fragmentation, and built the simplest solution that works.

We're not building in isolation. We use Onoma ourselves, daily. Every friction point we hit becomes a feature discussion. Every 'why doesn't it do this?' becomes a ticket.

This is what building at a venture studio looks like: close collaboration, fast decisions, real usage driving the roadmap.

What's working right now

The alpha is live. You can sign up at askonoma.com.

Here's what's available today:

- Free tier: 50K tokens per month, access to 8 models, full memory features

- Ambassador plan: €9/month for unlimited tokens and all 14 premium models

We're deliberately keeping the scope focused. Memory that works. Model switching that's seamless. Organization that happens automatically.

There's a waitlist for the free tier, but Ambassadors get instant access. We wanted to reward early believers while we scale infrastructure.

What's next

Alpha is just the beginning. Here's what we're building toward:

- Better memory retrieval. The current system is good. We want it to be invisible. You should never have to think about what Onoma remembers. It should just know.

- More integrations. MCP (Model Context Protocol) support is already in, so you can connect external tools and data sources today. We're expanding what that looks like.

- Team features. Right now Onoma is personal, but the same problem exists at the team level. How do you share context across a team without everyone starting from zero?

- Deeper model intelligence. Adaptive routing is v1. We want Onoma to genuinely understand which model is best for which task: not just follow preferences, but learn from outcomes.

The roadmap isn't fixed. It's shaped by what users need. That's the advantage of building at this stage: we can move fast and stay close to the people actually using the product.

Why I'm excited about this

I've been building products for over a decade. Some solved problems that mattered. Some didn't.

Onoma feels different. Not because it's technically impressive (though I think it is), but because it solves something I personally experience every day. The frustration of re-explaining context. The inefficiency of platform lock-in. The sense that AI tools should be smarter about continuity.

This is the kind of product I want to use. That's the best signal I know.

We're early. The alpha has rough edges. But the core experience, having AI that remembers you, already works. Everything else is iteration.

If you're someone who uses multiple AI providers, who's frustrated by losing context every time you switch, who wants your AI memory to be yours and not locked in someone else's platform, give Onoma a try.

Hi, I'm Mischa. I've been shipping products and building ventures for over a decade. First exit at 25, second at 30. Now Partner & CPO at Ryde Ventures, an AI venture studio in Amsterdam. Currently shipping Stagent and Onoma. Based in Hong Kong. I write about what I learn along the way.
